We present Connector, a comprehensive graph representation learning framework developed primarily in Python using the PyTorch library. Connector Ver. 0.5 is a test release that will be further developed to enable researchers from different fields to apply graph representation learning models to their research.

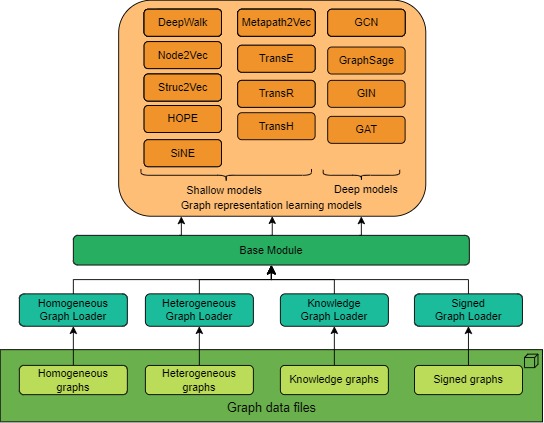

Connector consists of three main modules: graph data loaders, a base model, and graph representation learning modules. The graph data loader module provides four loaders, for homogeneous graphs, heterogeneous graphs, signed graphs, and knowledge graphs, so that researchers can handle different graph types and build and manipulate datasets stored in different file structures. The base model module provides neural network layers, aggregators, and sampling techniques for graphs, along with common utilities such as loading models and loading/saving parameters.
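To make the idea of a graph loader concrete, here is a minimal sketch in plain Python of parsing an edge list into an adjacency map, for either a homogeneous or a signed graph. The function name `load_edge_list`, its `signed` flag, and the "u v [sign]" line format are assumptions made for illustration; they are not Connector's API.

```python
from collections import defaultdict


def load_edge_list(lines, signed=False):
    """Parse whitespace-separated edges into an undirected adjacency map.

    Hypothetical helper for illustration only; not part of Connector.
    Each line is "u v" for a homogeneous graph, or "u v sign" with
    sign in {1, -1} when signed=True.
    """
    adjacency = defaultdict(dict)
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split()
        u, v = parts[0], parts[1]
        # Unsigned edges get a constant weight of 1.
        weight = int(parts[2]) if signed else 1
        adjacency[u][v] = weight
        adjacency[v][u] = weight
    return dict(adjacency)


homogeneous = load_edge_list(["a b", "b c"])
signed = load_edge_list(["a b 1", "b c -1"], signed=True)
```

The same adjacency structure serves both graph types here; a heterogeneous or knowledge graph loader would additionally need node or relation types per edge.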

Building on the data loaders and the base model, the framework supports thirteen implemented or modified graph embedding models: DeepWalk, Node2Vec, Struct2Vec, HOPE, SiNE, Metapath2Vec, TransE, TransR, TransH, GCN, GraphSage, GIN, and GAT. Users can modify the implemented models by adding, removing, or changing layers.
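As an illustration of what one of these models does, the sketch below shows the walk-generation step that DeepWalk is built on: uniform random walks are sampled from every node and later treated as "sentences" for a skip-gram model. This is a generic, standard-library sketch; the function `random_walks` and its parameters are hypothetical and do not reflect how Connector implements DeepWalk.

```python
import random


def random_walks(adjacency, walk_length, walks_per_node, seed=0):
    """Sample uniform random walks from every node (hypothetical helper).

    adjacency maps each node to a list of its neighbours.
    """
    rng = random.Random(seed)
    walks = []
    for _ in range(walks_per_node):
        nodes = list(adjacency)
        rng.shuffle(nodes)  # visit start nodes in a fresh order each pass
        for start in nodes:
            walk = [start]
            while len(walk) < walk_length:
                neighbours = adjacency[walk[-1]]
                if not neighbours:
                    break  # dead end: stop this walk early
                walk.append(rng.choice(neighbours))
            walks.append(walk)
    return walks


# Toy undirected graph with four nodes.
graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
walks = random_walks(graph, walk_length=5, walks_per_node=2)
```

Node2Vec differs mainly in how the next node is chosen: it biases the choice using return and in-out parameters rather than sampling neighbours uniformly.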

Furthermore, we aim to introduce deep graph embedding models that understand the graph structure, such as local/global positional embedding and structural embedding. Positional embedding captures the relative and absolute positions of nodes in the graph, which are not represented in node features. To capture graph structure, we plan to integrate powerful graph neural networks (GNNs) and graph transformer models into Connector.
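One simple way to see what positional information adds is to describe each node by its hop distance to a few anchor nodes. The sketch below does this with breadth-first search. It is an illustrative choice only, not the positional embedding Connector plans to provide; the function names and the `-1` marker for unreachable nodes are assumptions.

```python
from collections import deque


def bfs_distances(adjacency, source):
    """Return hop distances from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbour in adjacency[node]:
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist


def anchor_positional_features(adjacency, anchors):
    """Describe each node by its hop distance to each anchor.

    Hypothetical helper; unreachable nodes get -1 for that anchor.
    """
    per_anchor = [bfs_distances(adjacency, a) for a in anchors]
    return {node: [d.get(node, -1) for d in per_anchor] for node in adjacency}


# Path graph 0 - 1 - 2 - 3 with the two endpoints as anchors.
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
features = anchor_positional_features(path, anchors=[0, 3])
```

On this path graph, nodes 1 and 2 have the same degree and symmetric neighbourhoods, yet their distance vectors differ, which is the kind of information that node features alone do not carry.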

The Connector framework is available at:

Please cite our paper if you find Connector useful in your work:

@misc{nguyen2023connector,
  title={Connector 0.5: A unified framework for graph representation learning},
  author={Thanh Sang Nguyen and Jooho Lee and Van Thuy Hoang and O-Joun Lee},
  year={2023},
  eprint={2304.13195},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}
