We present UGT, a novel Graph Transformer model that preserves both local and global graph structures. UGT was developed by NS Lab, CUK, and is implemented on a pure PyTorch backend.

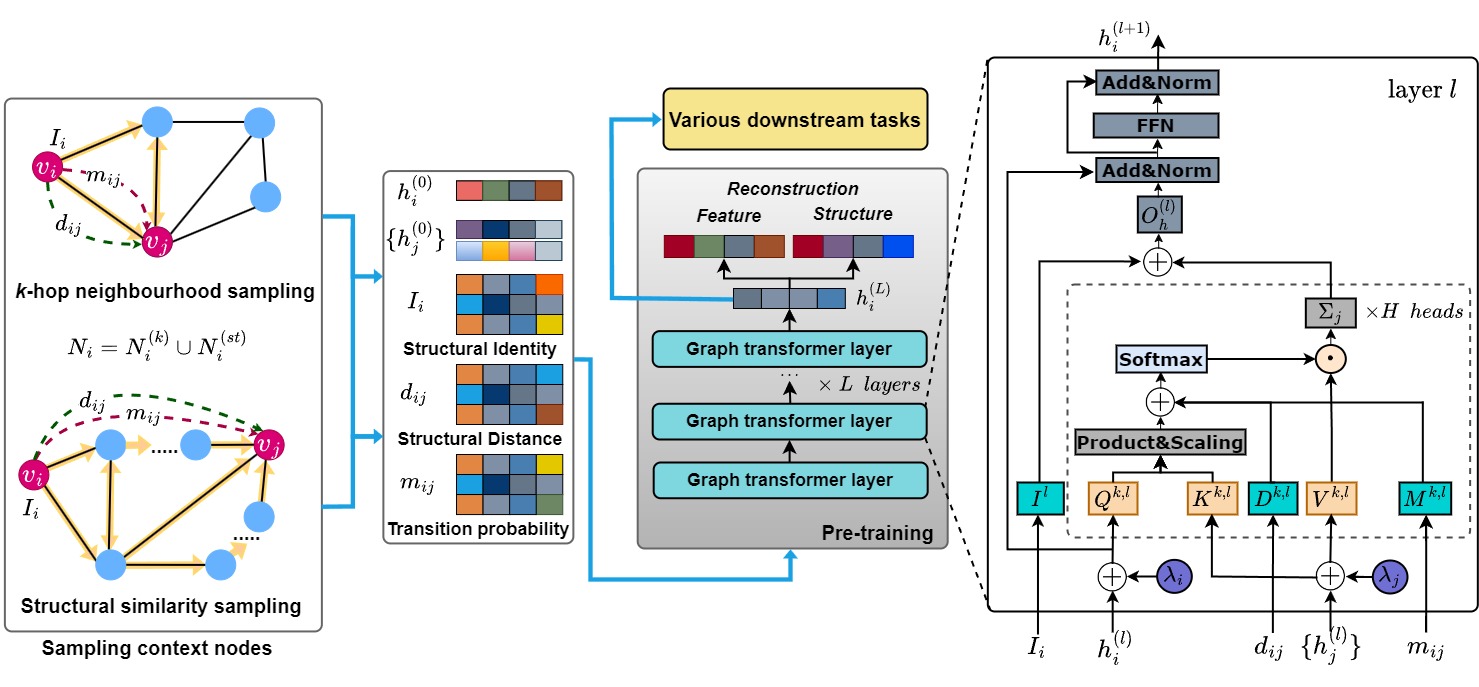

The overall architecture of Unified Graph Transformer Networks.

Over the past few years, graph neural networks and graph transformers have been successfully used to analyze graph-structured data, mainly focusing on node classification and link prediction tasks. However, most existing studies consider only local connectivity and ignore long-range connectivity and the roles of nodes. We propose Unified Graph Transformer Networks (UGT), which effectively integrate local and global structural information into fixed-length vector representations. UGT learns local structure by identifying local substructures and aggregating features of the k-hop neighborhoods of each node. To capture long-range dependencies, we construct virtual edges that bridge distant nodes with structural similarity. UGT learns unified representations through self-attention, encoding the structural distance and p-step transition probability between node pairs. Furthermore, we propose a self-supervised learning task that effectively learns transition probabilities to fuse local and global structural features, which can then be transferred to other downstream tasks. Experimental results on real-world benchmark datasets over various downstream tasks show that UGT significantly outperforms baselines consisting of state-of-the-art models. In addition, UGT reaches the third-order Weisfeiler-Lehman power to distinguish non-isomorphic graph pairs.
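To make the p-step transition probability mentioned above concrete, here is a minimal sketch in plain Python. It assumes the standard random-walk definition, where the one-step matrix is the row-normalised adjacency matrix and the p-step matrix is its p-th power. The function names (`transition_matrix`, `p_step_transition`) are hypothetical and are not taken from the UGT code base, which may compute these quantities differently.

```python
from fractions import Fraction


def transition_matrix(adj):
    """Row-normalise an adjacency matrix: P[i][j] = A[i][j] / degree(i)."""
    P = []
    for row in adj:
        deg = sum(row)
        # Assumption of this sketch: isolated nodes keep an all-zero row.
        P.append([Fraction(a, deg) if deg else Fraction(0) for a in row])
    return P


def matmul(X, Y):
    """Multiply two dense matrices given as lists of rows."""
    inner, cols = len(Y), len(Y[0])
    return [
        [sum(X[i][t] * Y[t][j] for t in range(inner)) for j in range(cols)]
        for i in range(len(X))
    ]


def p_step_transition(adj, p):
    """Return P^p (p >= 1): entry (i, j) is the probability that a
    p-step random walk starting at node i ends at node j."""
    P = transition_matrix(adj)
    result = P
    for _ in range(p - 1):
        result = matmul(result, P)
    return result


# Toy example: the path graph 0 - 1 - 2 - 3.
adj = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
P2 = p_step_transition(adj, 2)
# P2[1] == [0, 3/4, 0, 1/4]: from node 1, a 2-step walk returns to
# node 1 with probability 3/4 and reaches node 3 with probability 1/4.
```

Exact fractions are used here only to keep the toy example easy to check by hand; a practical implementation would more likely use sparse floating-point tensors.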

A short description of UGT:

- UGT learns both local and global structural information and fuses them into unified representations.

- UGT captures long-range dependencies between distant nodes as long as they are structurally similar. To do so, UGT constructs virtual edges that bridge distant nodes with structural similarity.

- UGT captures local structure through structural identity, distinguishing non-isomorphic local substructures and identifying similar ones.

- UGT is a self-supervised learning framework for graphs that bridges the conceptual gap between local and global structural features by preserving transition probabilities between nodes at multiple scales.

- Experiments on fifteen publicly available datasets over various downstream tasks demonstrate the superiority of UGT over SOTA baselines.

- UGT reaches the third-order Weisfeiler-Lehman (3d-WL) power to distinguish non-isomorphic graph pairs.
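The virtual-edge idea in the list above can be illustrated with a small sketch. This is not the similarity measure used by UGT: as a labeled assumption, it treats two nodes as structurally similar when the sorted degree sequences of their hop rings (up to `k` hops) match exactly, and it links such nodes only if they are at least `min_distance` hops apart. All names (`hop_distances`, `structural_signature`, `virtual_edges`) and both parameters are hypothetical.

```python
from collections import deque
from itertools import combinations


def hop_distances(adj_list, source):
    """Breadth-first search: hop count from source to each reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj_list[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def structural_signature(adj_list, dist, k):
    """Sorted degree sequence of each hop ring 0..k around a node.
    An illustrative stand-in for structural similarity (assumption)."""
    return tuple(
        tuple(sorted(len(adj_list[v]) for v, d in dist.items() if d == hop))
        for hop in range(k + 1)
    )


def virtual_edges(adj_list, k=1, min_distance=3):
    """Link node pairs with identical signatures that are at least
    min_distance hops apart (unreachable pairs count as distant)."""
    dists = {v: hop_distances(adj_list, v) for v in adj_list}
    sigs = {v: structural_signature(adj_list, dists[v], k) for v in adj_list}
    edges = []
    for u, v in combinations(sorted(adj_list), 2):
        d = dists[u].get(v, float("inf"))
        if d >= min_distance and sigs[u] == sigs[v]:
            edges.append((u, v))
    return edges


# Toy example: the path graph 0 - 1 - 2 - 3 - 4 - 5.
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}
print(virtual_edges(path, k=1, min_distance=3))  # [(0, 5), (1, 4)]
```

In this toy graph the two end nodes play the same role, as do their neighbors, so each pair is linked even though its members are far apart. Nodes 2 and 3 also share a signature but are adjacent, so no virtual edge is added between them.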

UGT is available at:

Cite “UGT” as:

Please cite our paper if you find UGT useful in your work:

@InProceedings{Hoang_2024,
  author    = {Hoang, Van Thuy and Lee, O-Joun},
  booktitle = {Proceedings of the 38th AAAI Conference on Artificial Intelligence (AAAI 2024)},
  title     = {Transitivity-Preserving Graph Representation Learning for Bridging Local Connectivity and Role-Based Similarity},
  year      = {2024},
  address   = {Vancouver, Canada},
  editor    = {Michael Wooldridge and Jennifer Dy and Sriraam Natarajan},
  month     = feb,
  number    = {11},
  pages     = {12456--12465},
  publisher = {Association for the Advancement of Artificial Intelligence (AAAI)},
  volume    = {38},
  doi       = {10.1609/aaai.v38i11.29138},
  issn      = {2159-5399},
  url       = {https://doi.org/10.1609/aaai.v38i11.29138},
}

@misc{hoang2023ugt,
  title         = {Transitivity-Preserving Graph Representation Learning for Bridging Local Connectivity and Role-based Similarity},
  author        = {Van Thuy Hoang and O-Joun Lee},
  year          = {2023},
  eprint        = {2308.09517},
  archivePrefix = {arXiv},
  primaryClass  = {cs.LG}
}
