We present CGT, a novel Graph Transformer model that specialises in mitigating degree biases in the message-passing mechanism. It was developed by NS Lab, CUK, on a pure PyTorch backend.

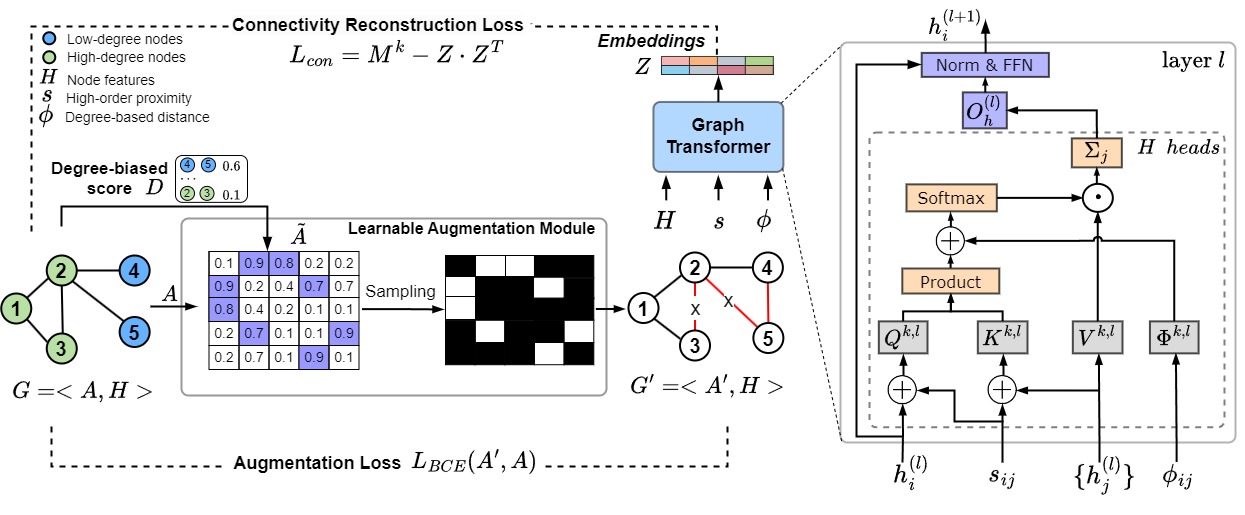

The overall architecture of Community-aware Graph Transformers.

We utilize community structures to address node degree biases in message passing (MP) via learnable graph augmentations and novel graph transformers. Recent augmentation-based methods showed that MP neural networks often perform poorly on low-degree nodes, a degree bias caused by too few messages reaching those nodes. Despite their success, most of these methods use heuristic or uniform random augmentations, which are non-differentiable and may not always generate edges that are valuable for learning representations. In this paper, we propose Community-aware Graph Transformers (CGT) to learn degree-unbiased representations based on learnable augmentations and graph transformers by extracting within-community structures. First, we design a learnable graph augmentation that generates more within-community edges connecting low-degree nodes through edge perturbation. Second, we propose an improved self-attention that learns the underlying proximity and the roles of nodes within the community. Third, we propose a self-supervised learning task that learns representations preserving the global graph structure and regularizes the graph augmentations. Extensive experiments on various benchmark datasets show that CGT outperforms state-of-the-art baselines and significantly reduces node degree biases.
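To make the idea of within-community edge perturbation concrete, here is a minimal sketch in plain Python. It is not CGT's augmentation: CGT learns which edges to add, whereas this sketch uses a fixed inverse-degree weighting as a stand-in. The function name `sample_within_community_edges`, the weighting formula, and the toy graph are all assumptions made for illustration.

```python
import random
from itertools import combinations


def sample_within_community_edges(edges, community, num_new_edges, seed=0):
    """Sample new edges between non-adjacent nodes of the same community.

    Illustrative only: the sampling weights are a fixed inverse-degree
    heuristic (an assumption), not a learned, differentiable augmentation.
    """
    rng = random.Random(seed)
    existing = {frozenset(e) for e in edges}

    # Node degrees in the original graph.
    degree = {node: 0 for node in community}
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1

    # Candidate pairs: same community and not already connected.
    candidates = [
        (u, v)
        for u, v in combinations(sorted(community), 2)
        if community[u] == community[v] and frozenset((u, v)) not in existing
    ]
    # Hypothetical weighting: pairs of low-degree nodes get larger weights.
    weights = [1.0 / ((1 + degree[u]) * (1 + degree[v])) for u, v in candidates]

    # Weighted sampling without replacement.
    chosen = []
    for _ in range(min(num_new_edges, len(candidates))):
        idx = rng.choices(range(len(candidates)), weights=weights)[0]
        chosen.append(candidates.pop(idx))
        weights.pop(idx)
    return chosen


# Toy graph: node 0 is a hub in community 0, node 4 is a hub in community 1.
edges = [(0, 1), (0, 2), (0, 3), (4, 5), (4, 6)]
community = {0: 0, 1: 0, 2: 0, 3: 0, 4: 1, 5: 1, 6: 1}

new_edges = sample_within_community_edges(edges, community, num_new_edges=2)
all_candidates = sample_within_community_edges(edges, community, num_new_edges=10)
```

In this toy graph every candidate pair joins two degree-1 nodes of the same community, so any sampled edge gives a low-degree node an extra within-community neighbour without linking the two communities.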

A short description of CGT:

- We propose the utilization of within-community structures in learnable augmentations to allow low-degree nodes to be sampled with high probabilities via edge perturbation.

- We propose a novel graph transformer with improved self-attention that takes the augmented graph as input and encodes within-community proximity and the roles of nodes into dot-product self-attention. It is worth noting that we directly encode the high-order proximity into full dot-product attention, which could enable CGT to discover the proximity information along with messages from neighborhoods to target nodes.

- We propose a self-supervised learning task that preserves graph connectivity and regularizes the augmented graph data when generating the representations.

- Extensive experiments demonstrate that our model outperforms baselines on benchmark datasets and improves degree fairness.
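The second point above, encoding proximity directly into dot-product self-attention, can be sketched as follows. This is a minimal illustration in plain Python rather than the CGT implementation: it assumes the proximity enters as an additive bias on the scaled dot-product scores, and the names `proximity_biased_attention` and `bias_scale` as well as the toy inputs are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def proximity_biased_attention(queries, keys, values, proximity, bias_scale=1.0):
    """Full dot-product attention with an additive proximity bias.

    Assumption: score(i, j) = q_i . k_j / sqrt(d) + bias_scale * proximity[i][j].
    The exact form used by CGT may differ.
    """
    d = len(queries[0])
    outputs, attention = [], []
    for i, q in enumerate(queries):
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d)
            + bias_scale * proximity[i][j]
            for j, k in enumerate(keys)
        ]
        weights = softmax(scores)
        attention.append(weights)
        # Weighted sum of value vectors for target node i.
        outputs.append(
            [
                sum(weights[j] * values[j][c] for j in range(len(values)))
                for c in range(len(values[0]))
            ]
        )
    return outputs, attention


# Three nodes with identical (zero) queries and keys, so only proximity matters.
queries = keys = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Nodes 0 and 1 are close to each other; node 2 has no proximity signal.
proximity = [[0.0, 2.0, 0.0], [2.0, 0.0, 0.0], [0.0, 0.0, 0.0]]

out, attn = proximity_biased_attention(queries, keys, values, proximity)
```

Because attention is computed over all node pairs, node 0 attends more strongly to node 1 than to node 2 even if no edge connects them, which is the sense in which proximity information can reach a target node alongside neighbourhood messages.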

CGT is available at:

Cite “CGT” as:

Please cite our paper if you find CGT useful in your work:

@misc{hoang2023mitigating,
  title={Mitigating Degree Biases in Message Passing Mechanism by Utilizing Community Structures},
  author={Van Thuy Hoang and O-Joun Lee},
  year={2023},
  eprint={2312.16788},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}
